You should build your own eval tools, pretty much always

Invest in tooling early! It's not that hard and it makes a huge difference

Hi, I’m Taylor Hughes, CTO and co-founder of Hypernatural, the fastest AI video generation platform for storytellers. Before building Hypernatural, I shipped code at Facebook, Clubhouse, YouTube and a bunch of other places. Find me on Medium or LinkedIn.

TL;DR — Invest in eval tooling early! We built our own quality evaluation system to dramatically improve our video outputs. It’s not that hard, and if you’re building a service that outputs video or uses multiple generative models simultaneously, you should probably build your own system too.

Even shorter TL;DR: turns out Greg Brockman was right (assuming you have the right tooling).

Here’s a quick example before we dive in: the first row is our first test output after integrating with Pika, a video generation foundation model; the second row is the system we shipped with after dozens of rounds of improving the integration to turn the outputs into something our users are excited about:

The rows in the image above represent complete production video outputs, rather than a collection of random entries in the eval set. Here are the same unedited eval run results, compared with what a user would see in the Hypernatural editor:

Diving in...

My co-founder and I are product people, so, if we’re being honest, we weren’t initially super interested in doing in-depth model evaluation. Most of all, we want to build user-facing features and experiences to help creative people have fun telling their stories.

But in building Hypernatural, we realized that every time we changed one layer of inputs or outputs in our generation process (e.g. a prompt, a model, a pipeline), it was too hard to understand how the entire system changed. Small changes—for example, editing the prompt logic for defining the characters in a story—can have dramatic ripple effects across the entire generation pipeline. (One time we inadvertently turned many of our outputs into accidental horror stories by editing a negative image prompt! Whoops.)

It became clear: In order to make exciting videos consistently, we needed to first build internal tools to allow us to run repeatable multi-modal system evaluation.

This is not just a matter of assessing model quality. In practice, there are 3 different problems we care about when we run system evals:

Prompt quality: how well do our current prompts (e.g. our logic to generate a custom video style based on an image input) work in a production-like environment, and what happens when we change them?

Model quality: how well does a given model (e.g. a Flux LoRA) work on production-like inputs, and how do the settings (e.g. guidance scale) and prompts impact the outputs?

Performance/latency: how long does a given part of the pipeline (e.g. storyboard creation) take, and how reliable is it based on the inputs?

These are not separable problems. The quality of the prompts impacts the quality of the model outputs, which in turn changes the tolerance for longer inference times. These problems are compounded when different steps of the process cover wildly variable user inputs and toggle between text, images and video.

So we built some incredibly useful internal tools to help us understand the whole system, end to end, with real-world example inputs. This way we know for sure that when we ship a change to our prompts, models or hosted providers, end-user quality is consistently improving.

Just to say it again, if there’s one thing you should take away from this post, it’s this: invest in eval tooling early! We’ll share a bit about why these tools are absolutely necessary, and how to build them if you find yourself in a similar position.

Background: How Hypernatural works

To generate a video in Hypernatural, we built a streamlined pipeline that seamlessly incorporates various generative models focused on text, audio, images and video. The behind-the-scenes technical details are largely invisible to the user, who instead inputs (or creates) a script, selects (or generates) a style and characters, and gets an editable, ready-to-share video.

All of this also needs to happen fast: our mantra is that the user should not wait more than a minute (p50) to see something awesome. The video that comes out has to be good enough, often enough, that folks actually want to share it, continue to use the product, and upgrade to a paid plan.
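Checking a target like this against eval data doesn’t take much. Here’s a minimal sketch, assuming you record one end-to-end latency (in seconds) per eval input. The function name, the budget constant and the sample numbers are all hypothetical and only there for illustration:

```python
from statistics import median

# Assumption: the budget mirrors the "no more than a minute (p50)" mantra.
P50_BUDGET_SECONDS = 60.0


def p50_within_budget(latencies_s, budget_s=P50_BUDGET_SECONDS):
    """Return (p50, ok) for a list of per-run latencies in seconds."""
    if not latencies_s:
        raise ValueError("need at least one latency sample")
    p50 = median(latencies_s)
    return p50, p50 <= budget_s


# Made-up numbers: one slow outlier barely moves the median.
sample = [41.0, 48.5, 52.0, 55.0, 190.0]
p50, ok = p50_within_budget(sample)
print(f"p50={p50:.1f}s, within budget: {ok}")
```

Using the median means a single slow generation won’t fail the check, which is also why it’s worth looking at the slow tail separately.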

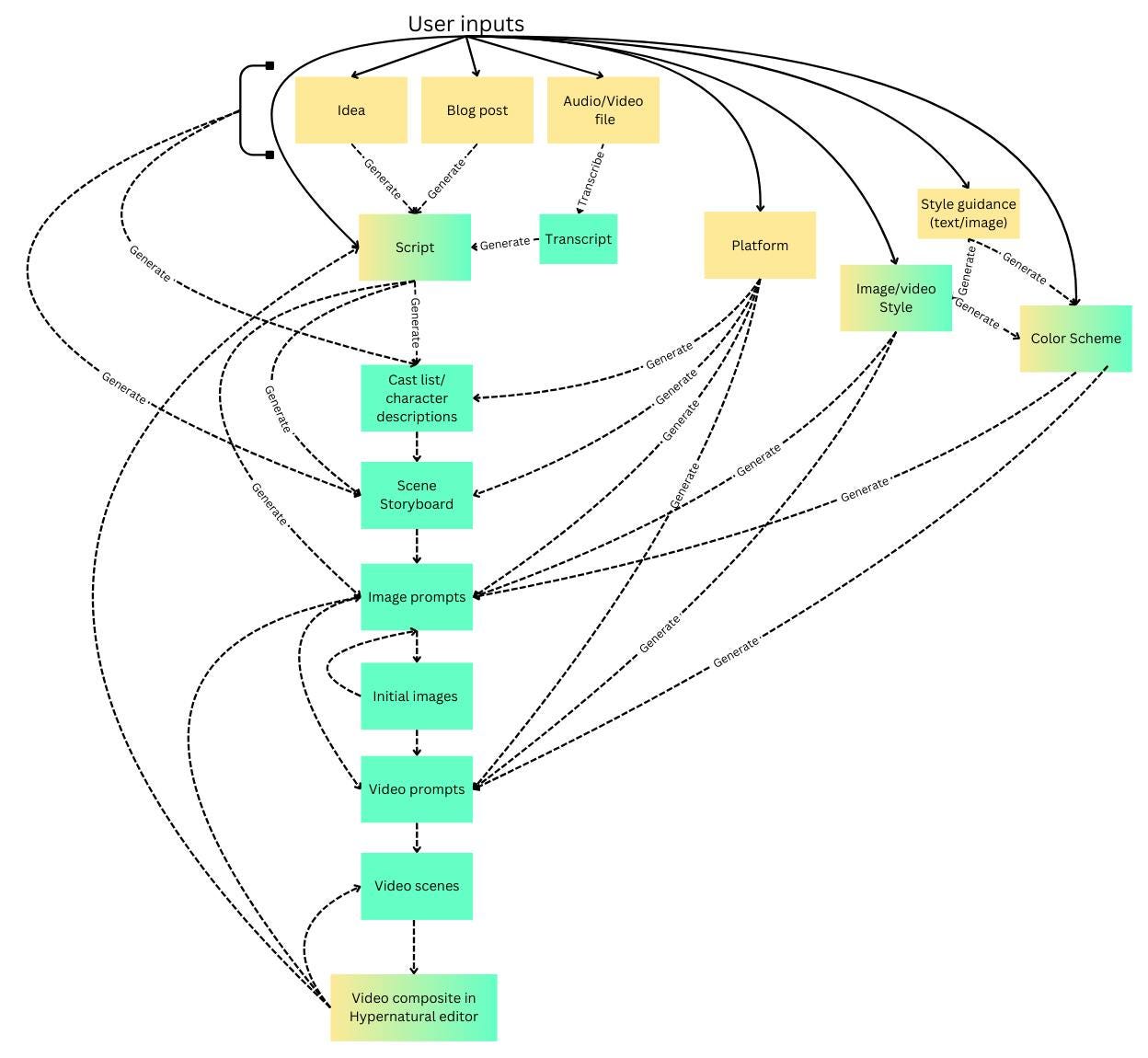

One of the biggest challenges here is getting the system to work across the matrix of diverse inputs, multiplied by the wide range of options users might choose: story genres, image styles, and of course the underlying generative models. Hypernatural lets folks adjust all these parameters, which leads to a huge variety of potential outputs. Here’s a simplified picture of the current user flow. Things in yellow are user inputs. Things in green are generated by our system (and things in both colors can be either user inputs or generated outputs).

Managing the entire generation funnel, including multiple loosely controlled user inputs that come in at different times—from idea, script, and voice generation, to style and character creation, to storyboarding and scene creation, to image and video generation and editing—requires knowing what will happen to the whole system if any part of it changes.

Rolling our own eval system, using our favorite (boring!) technology

This is where strong system evaluation comes in. When we realized last year that we needed to invest here, we looked around for an off-the-shelf eval tool, but ultimately decided to build our own, for a variety of reasons (more on that in the next section).

What tools have we built

So far we have 3 tools that can run on different parts of our model stack or be chained together for an end-to-end picture:

Ideation, script creation and categorization (text → text)

Image generation and styling (text + image → text + images)

Video model evaluation (text + image → text + image + video)

One of the benefits of rolling our own system is that we could do it in the most boring/efficient way possible. We built it in our app’s Django admin, and we can pipe production prompts and production clip data into it at any step.

The Django admin is one of our favorite pieces of the stack: It’s very familiar to our team, because it was leveraged heavily by previous projects we’ve worked on — LaunchKit, Clubhouse, PREMINT and many others — and it’s infinitely hackable! The perfect internal-only place to build some quick, unpolished, custom UI.

Here’s what it looks like for a video model run, with the actual prompts stripped out:

How we use our eval tools.

An eval ‘run’ for us consists of three things:

A set of representative inputs (e.g. a set of scripts for sci-fi stories that mimic our users’ scripts)

A collection of prompts that will run on the inputs, either in parallel or chained (e.g. a text-to-text prompt to create the storyboard based on the script, followed by a text-to-text prompt to create the image generation prompt for each item in the storyboard, followed by a text-to-image prompt to generate each image in the story)

A collection of model choices and settings for each prompt (e.g. OpenAI vs Claude, SDXL vs Flux).
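If it helps to see those three ingredients as data, here’s a rough sketch of how a run could be described and expanded into individual cases. Everything in it is an assumption made for illustration: the class names, the step names, the model labels and the example scripts are hypothetical and not lifted from the actual tool.

```python
from dataclasses import dataclass, field
from itertools import product


@dataclass
class ModelChoice:
    name: str                                      # illustrative label, e.g. "sdxl"
    settings: dict = field(default_factory=dict)   # e.g. {"guidance_scale": 3.5}


@dataclass
class EvalRun:
    inputs: list         # representative scripts
    prompt_chain: list   # prompt steps, run in order for every input
    text_models: list    # candidates for the text-to-text steps
    image_models: list   # candidates for the text-to-image step

    def cases(self):
        """Expand the run into one case per input and model combination."""
        for script, text_model, image_model in product(
            self.inputs, self.text_models, self.image_models
        ):
            yield {
                "script": script,
                "steps": list(self.prompt_chain),
                "text_model": text_model,
                "image_model": image_model,
            }


run = EvalRun(
    inputs=["A sci-fi story about a lost probe", "A sci-fi story about a moon colony"],
    prompt_chain=["script_to_storyboard", "storyboard_to_image_prompt", "image_prompt_to_image"],
    text_models=[ModelChoice("openai"), ModelChoice("claude")],
    image_models=[ModelChoice("sdxl"), ModelChoice("flux", {"guidance_scale": 3.5})],
)
cases = list(run.cases())
print(len(cases))  # 2 inputs x 2 text models x 2 image models
```

The number of cases multiplies quickly, which is one reason to keep each input set small and representative.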

This produces a collection like the one shown above, which we then review manually. In general, when we’re shipping a change (for example, migrating our baseline image generation to FLUX.1-schnell from SDXL), we’ll do a dozen or more eval runs: first to tune the prompt logic on a general set of inputs, and then to test out the impact of the change on different popular image styles and content genres.

It’s not always a precise process. Sometimes we’ll test a new idea (e.g. adding a distinct step to define the characters in a story), then spend 10 eval runs tweaking the rest of the prompts to unwind the new problems that get introduced along with the benefits, before realizing that the initial idea belongs in a completely different part of the pipeline. In the wise words of Richard Feynman: fiddling around is the answer.

Show me an example.

We used the set of evaluations shown above to hone our video generation prompts and settings for different image types and styles and to determine when and where to deploy Pika vs SVD generations automatically, based on which styles and images perform best. Here’s another before-and-after example from our Pika integration:

In our first run, we used Pika’s text-to-video generation directly and created very simple prompt guidance based on our SDXL image prompt generation logic. But we quickly learned that Pika performed significantly better, and that we could control the style more closely, if we passed in high-quality images. We also spent a whole bunch of cycles tuning Pika’s movement and guidance settings and our own image and video prompt generation logic to produce the highest-performing prompts.
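Deciding which video model to use for which style can start from the manual review itself. Here’s one possible sketch, assuming reviewers give each output a numeric score. The scoring scale, the style labels and the numbers below are made up for illustration, and this is not a description of how the routing is actually implemented:

```python
from collections import defaultdict
from statistics import mean


def pick_model_per_style(reviews):
    """reviews: iterable of (style, model, score). Returns {style: best model}."""
    scores = defaultdict(lambda: defaultdict(list))
    for style, model, score in reviews:
        scores[style][model].append(score)
    # For each style, keep the model with the highest mean reviewer score.
    return {
        style: max(by_model, key=lambda m: mean(by_model[m]))
        for style, by_model in scores.items()
    }


# Hypothetical review data: (style, model, score on an assumed 1-5 scale).
reviews = [
    ("style_a", "pika", 2),
    ("style_a", "svd", 4),
    ("style_b", "pika", 5),
    ("style_b", "svd", 3),
    ("style_b", "pika", 4),
]
routing = pick_model_per_style(reviews)
print(routing)
```

A mean over a handful of reviews is a blunt instrument, so a table like this is best treated as a starting point for the next eval run rather than a final answer.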

It’s not just for video!

Digging into a different example: below is a comparison between SDXL, our first run with Flux, and what we’re shipping next week for our popular 8-bit, retro video game image style.

On our first run, we tried re-using exactly the same prompts and style guidance that we’ve developed for SDXL. As you can see above, the adherence to the prompt is already better in the first run with Flux (where’s Lila in the second SDXL image, and why is that third image in space?). But Flux also loses the pixelated quality we’re looking for and veers frequently toward a more cutesy cartoon/anime style. After a few rounds of edits, we were able to consistently solve this problem via a mix of updates to the style guidance and updates to the base image prompt generation logic.

Model outputs don’t exist in a vacuum.

Beyond the improved results, the important thing here is that we can also visualize the storyboard of the whole video. If you look at the eval tool in comparison to our in-product editor UI, you’ll see that our eval interface is effectively a way of viewing all the narration and scenes of the video simultaneously along with the path through various model inferences for each scene. Here’s the video again. Note that in the eval tool on the left, you can see the scene text (aka captions), image prompt, video and video prompt for each scene.

Because this pipeline exists within our code stack, we can also run evaluations on real-world data sets easily, which means we’re always shipping changes that improve what our users actually care about, as opposed to making generations better for our idealized version of reality.

Existing eval tooling doesn’t cut it for multi-modal systems

Model infrastructure, including eval tooling, is a ripe space for innovation (and VC investment) right now. We looked at a bunch of services out there, including paid hosted solutions like LangSmith, Bento and Braintrust, open source options like Langfuse, and a bunch of smaller start-ups building here.

But we ran into a few problems:

Auto-scoring for visual outputs (i.e. images and video) is an unsolved problem. Sure, things like visual artifacts can be detected, but the definition of ‘good’ for video outputs, especially more stylized ones, is subjective. The rise of UGC short form video has definitively proved that production value is only loosely related to quality.

There’s nothing out there that works well when both your inputs and outputs span multiple modalities across text, images and video.

Production prompts and workflows inevitably drift from prompts tested in a vacuum. You’ll add fixes for different potential inputs and outputs, you’ll transmogrify user inputs in different ways to tweak the end result — and if you’re evaluating prompts and results without these bits of code, you won’t be able to repro the quality you see in a separate eval system.

Every tool we looked at assumes a 1-1, linear mapping between inputs and outputs. Even if you can chain models together across modalities in a playground, this linear path does not represent the output we care about, which is a video that spans multiple scenes with multiple shorter images and videos.

This last point is the most important. Hypernatural videos are made up of scenes, which all have to work together, both with each other and with whatever narration, captions and transitions are layered on top. Rather than a linear path run on a set of inputs, what we’re really after is something more like a tree, where we can see the impact of a change across the whole track of branching outputs simultaneously. There’s just nothing out there that handles this problem effectively.
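To make the tree idea concrete, here’s a small sketch of one way to hold an eval result so that every scene’s path through the models stays visible at once. The `Node` class, the node kinds and the example captions are hypothetical stand-ins, not the structure the real tool uses:

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    kind: str    # e.g. "script", "scene", "image_prompt", "image", "video"
    value: str
    children: list = field(default_factory=list)

    def add(self, kind, value):
        """Attach a child output and return it so calls can be chained."""
        child = Node(kind, value)
        self.children.append(child)
        return child

    def leaves(self):
        """Yield the final outputs at the end of every branch."""
        if not self.children:
            yield self
        for child in self.children:
            yield from child.leaves()

    def render(self, depth=0):
        """Return the tree as indented lines, one per node."""
        lines = ["  " * depth + f"{self.kind}: {self.value}"]
        for child in self.children:
            lines.extend(child.render(depth + 1))
        return lines


# One script branches into scenes; each scene has its own chain of inferences.
root = Node("script", "Lila finds a map")
for caption in ["Lila opens the chest", "Lila sails at dawn"]:
    scene = root.add("scene", caption)
    prompt = scene.add("image_prompt", f"illustration of: {caption}")
    image = prompt.add("image", "<generated image>")
    image.add("video", "<generated video>")

print("\n".join(root.render()))
```

Rendering the whole tree for two versions of a prompt side by side is the kind of comparison a strictly linear input-to-output tool can’t show.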

The Takeaway: You should really build some eval tooling!

What did we learn from all this? If you’re building a product that uses AI to create images or video, here are a few takeaways:

Invest in eval tooling early. Hypernatural videos took a quantum leap forward after we shipped our first and simplest tool for evaluating the quality of our auto-generated image prompts.

You should probably build your own tools. The reality is that, for multimodal pipelines, the tooling just isn’t there yet. Don’t let this be an excuse to put off running output-based evaluation today.

Added benefit: you don’t have to send your precious data to yet another startup with questionable data policies.

Run your system on real-world data sets. I’ve met a lot of people in the video space who are focused on idealized inputs and outputs. To them I say: the road to hell is paved with Sick Demos that took hours of cherry-picked regenerations to produce.

Don’t underestimate the value of human review in an interface that mimics the way that users will interact with the outputs. As mentioned above, the definition of ‘good’ for generated video is unsettled and, say it with me, production value is not the same as quality. But more than that, the output is only good if it’s good in the context of your product. This doesn’t mean you have to build your eval tools inside your product UX (you probably shouldn’t do that either). But taking steps to make sure that your eval outputs represent real-world outputs visually makes it that much easier to assess if they’re actually working for your users.

Scaled evaluations for every step of our pipeline with real-world, multimodal datasets are the only reason Hypernatural can produce stylized, finished videos in minutes that people actually want to share.

We hope you can learn something from our bumpy (and ongoing) road to awesome video generation!

Thank you for reading! Special thanks to Robert Ness, Rushabh Doshi, Glenn Jaume, and David Kossnick for their advice on earlier drafts.

Interested in learning more? Check out our website, go make some videos, and follow us on LinkedIn or Twitter!
